What's the Main Bottleneck for AI Adoption?

Everyone talks about the chip shortage. But there's a deeper constraint emerging that will reshape the AI landscape.

This is the second post in the AI Landscape series. If you missed the first one, “What outages reveal about AI provider priorities”, check it out.

The historical bottleneck

There has been a worldwide shortage of compute for a while now.

You can feel this shortage when APIs and AI-based services slow to a crawl. And it’s not about outages; it’s the day-to-day reality of an AI user: at certain times of day, some services are simply unusable, especially on their “free” tiers.

NVIDIA, TSMC, AMD, Intel, Samsung, Google and others are working hard to increase the available compute power. But you don’t build a chip factory like you build a software company: it’s a long process, and it costs a lot of money. And we need LOTS of compute.

Compute power right now comes mostly from GPUs, and mostly from NVIDIA GPUs. They are developers’ favorites because the hardware is really good and the software stack (CUDA) is just as good, a combination nobody but NVIDIA has. That’s why NVIDIA just crossed the $5T market cap mark.

The upcoming bottleneck

While I was preparing this post, Nicolas Steegmann, my co-founder at Stupeflix and now at Random Walk, sent me this:

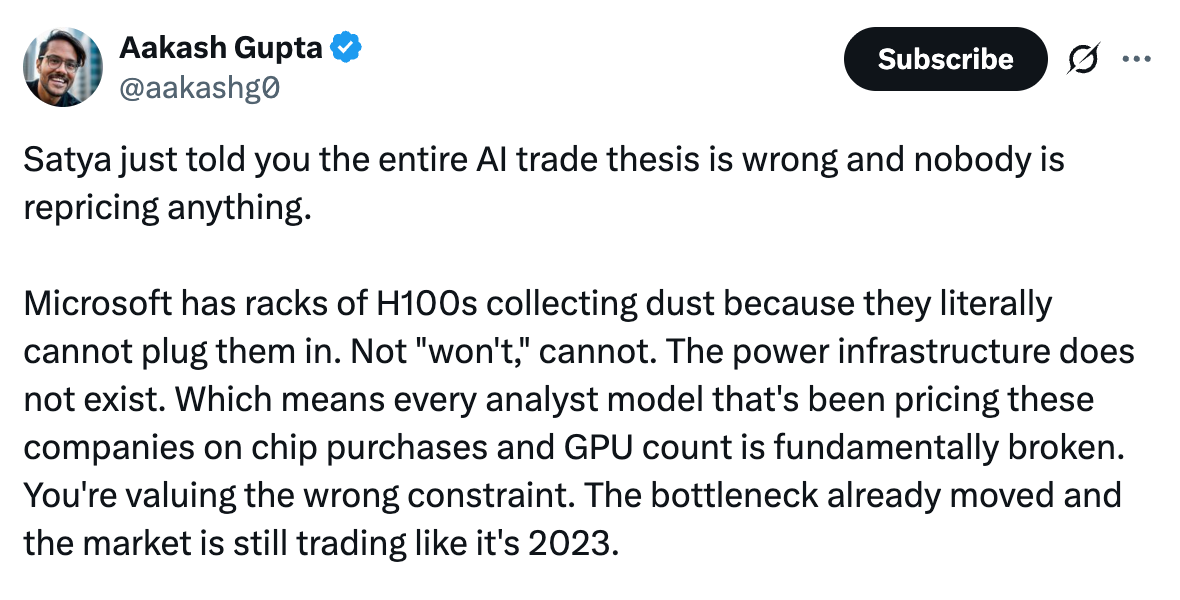

That’s why companies are signing huge deals with energy providers. That’s why companies like OKLO (backed by OpenAI CEO Sam Altman) or SMR are valued at billions of dollars even though they don’t have so much as a draft plan for a product. And you know what? When your goal is to build nuclear reactors, as theirs is, you should have a plan at some point… Building a reactor takes ~10 years, while in the same time you can probably scale chip production by several orders of magnitude. That shows you how important this second bottleneck is, and of course investors know it.

The signal in the noise

When Anthropic announces its deal with Google in gigawatts instead of teraflops, that’s not a mistake. That’s a signal.

It’s a telling hint of what we just saw: raw compute power is quickly becoming secondary, and energy efficiency is becoming the primary bottleneck.

This changes everything. It’s not about who has the most powerful chips anymore. It’s about who can keep them running.

What this means for the AI landscape

And this shift in what matters most is about to shake up the entire AI landscape. When you’re energy-constrained, “good enough and efficient” starts to beat “powerful and hungry.”

So the game is on. The battle behind the scenes right now is increasingly about energy efficiency, not just raw compute power.
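A quick back-of-the-envelope sketch shows why “good enough and efficient” can win. The two chips and their numbers below are entirely hypothetical, chosen only to illustrate the arithmetic: when the power budget, not chip supply, is the limit, what matters is throughput per watt rather than throughput per chip.

```python
# Back-of-the-envelope comparison under a fixed power budget.
# All chip figures are HYPOTHETICAL, for illustration only (not real specs).
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    power_w: float     # power draw per chip, in watts (hypothetical)
    throughput: float  # work per second per chip, arbitrary units (hypothetical)


def fleet_throughput(chip: Chip, power_budget_w: float) -> float:
    """Total throughput when energy, not chip supply, is the binding limit."""
    n_chips = int(power_budget_w // chip.power_w)  # how many chips we can keep powered
    return n_chips * chip.throughput


powerful = Chip("powerful and hungry", power_w=1000, throughput=100)
efficient = Chip("good enough and efficient", power_w=400, throughput=60)

budget_w = 1_000_000  # a hypothetical 1 MW site

for chip in (powerful, efficient):
    print(f"{chip.name}: {fleet_throughput(chip, budget_w):,.0f} units/s")
```

With these made-up numbers, each efficient chip is 40% slower, yet the site serves 50% more overall (150,000 vs 100,000 units/s). Flip the constraint to a fixed number of chips with unlimited power, and the powerful chip wins again.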

Google Cloud numbers for Q3 2025 were very good, and NVIDIA is going through the roof too. No wonder: once again, there is a worldwide shortage of compute, period. And that may define the world we live in for years to come (or forever?): there is no such thing as enough compute.

What’s next

Next time, we will see why Google and NVIDIA are the main contenders in this energy-constrained race.